Early Methods of Recording Information

The journey of data storage begins long before the digital age, rooted deeply in human history.

Perhaps one of the greatest examples is the Library of Alexandria.

It was founded in the early 3rd century BCE in Alexandria, Egypt by Ptolemy I Soter, a successor of Alexander the Great.

The library was part of a larger research institution called the Musaeum of Alexandria.

Scholars estimate that at its peak, the library contained anywhere from 40,000 to 400,000 scrolls.

One of the library’s main goals was to collect all the world’s knowledge. It is said that any ship that docked in Alexandria was inspected for scrolls, which were then copied by official scribes. The original scrolls were kept in the library, and the copies were given back to the owners. This aggressive acquisition strategy significantly contributed to the library’s extensive collection.

However, the Library of Alexandria also serves as a cautionary tale about the vulnerabilities of centralized information storage. It is believed to have suffered multiple incidents of destruction over centuries, with the most significant loss attributed to a fire that purportedly consumed a vast portion of its invaluable manuscripts. The precise events surrounding the library’s decline are shrouded in mystery and the subject of much historical debate, but the symbolism is clear: the loss of the Library of Alexandria is a potent reminder of the fragility of knowledge when stored in a singular location.

This historical lesson underscores a timeless principle in data preservation: the importance of redundancy. Just as the ancients learned the hard way that centralizing valuable information in one place is inherently risky, so too have modern data storage strategies evolved to mitigate similar risks. Today, we understand that to preserve information effectively, it is crucial to maintain multiple copies in diverse locations and formats.

Or, put simply: “don’t put all your eggs in one basket!”
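The redundancy principle translates directly into practice. Below is a minimal sketch, not a real backup tool: it copies one file into several directories and verifies each copy against a checksum. The function names (`replicate`, `sha256_of`, `demo`) and the three temporary "sites" are illustrative assumptions; in real use the destinations would be separate disks, machines, or regions.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def replicate(source: Path, destinations: list[Path]) -> list[Path]:
    """Copy `source` into every destination directory and verify each copy."""
    expected = sha256_of(source)
    copies = []
    for directory in destinations:
        directory.mkdir(parents=True, exist_ok=True)
        copy = Path(shutil.copy2(source, directory / source.name))
        if sha256_of(copy) != expected:
            raise IOError(f"copy at {copy} does not match the original")
        copies.append(copy)
    return copies


def demo() -> int:
    """Replicate a file to three hypothetical sites, lose one, count survivors."""
    with tempfile.TemporaryDirectory() as root:
        base = Path(root)
        scroll = base / "scroll.txt"
        scroll.write_text("All the world's knowledge")
        sites = [base / "site_a", base / "site_b", base / "site_c"]
        copies = replicate(scroll, sites)
        original_hash = sha256_of(scroll)
        copies[0].unlink()  # one "library" burns down
        survivors = [c for c in copies
                     if c.exists() and sha256_of(c) == original_hash]
        return len(survivors)


print(demo())  # number of intact copies left after losing one site
```

Losing one site leaves the other copies intact, and the checksum comparison is what tells you a surviving copy can still be trusted.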

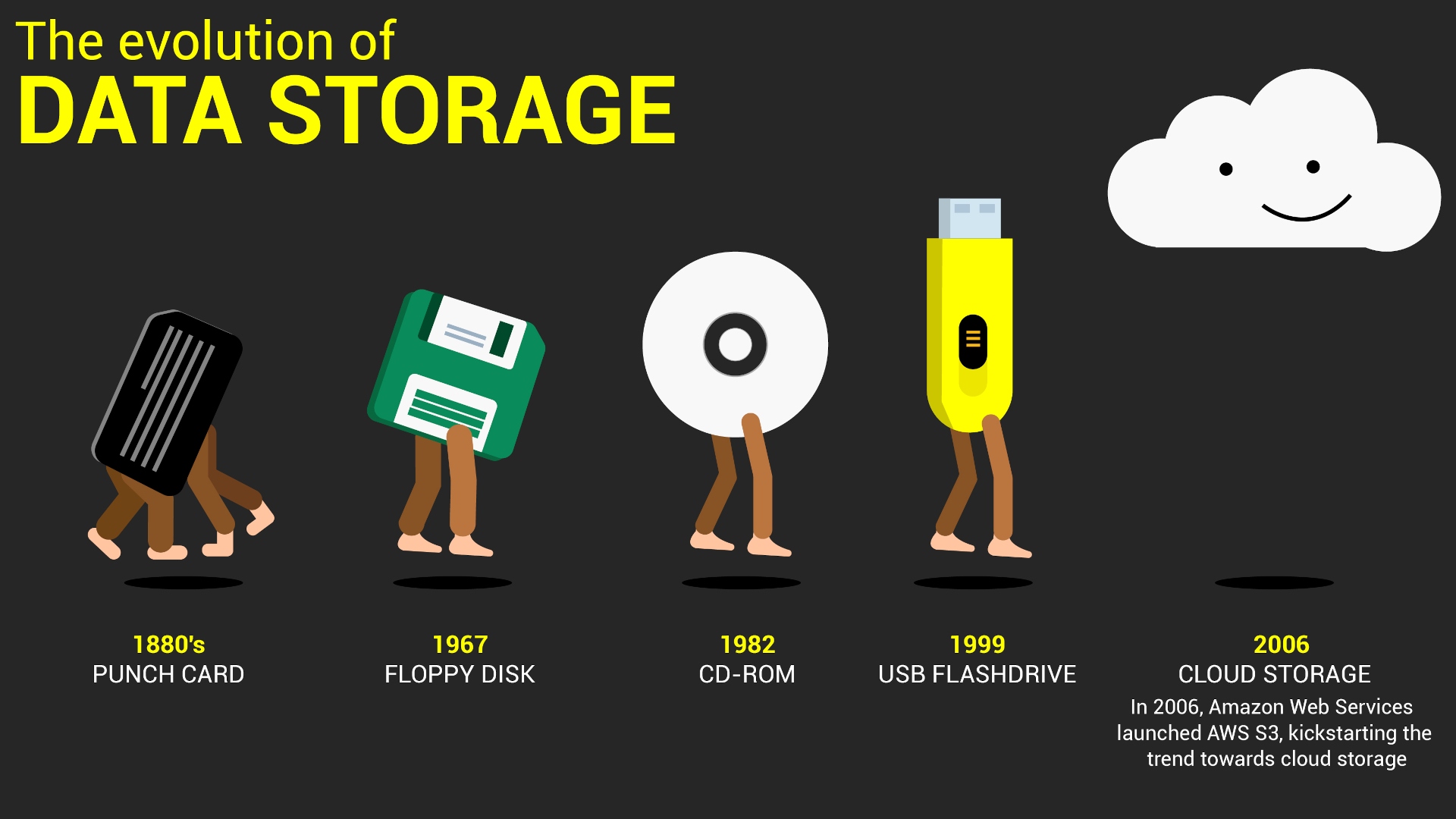

Punch Cards

Let’s jump directly to 1890.

Punch cards, also known as punched cards or perforated cards, emerged as a pioneering method for data storage and processing, marking a significant milestone in the evolution of information technology. Developed in the early 18th century for controlling textile looms, punch cards first found their modern utility in data storage in the late 19th century with the work of Herman Hollerith, whose tabulating machines were used to process the 1890 United States census.

The purpose of punch cards was to store information in a physical format that machines could read. Each card contained a series of holes punched in specific positions to represent data. The advantage of this system was its simplicity and the speed with which machines could process these cards, significantly reducing the time and labor required for data handling tasks compared to manual methods.
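To make "holes punched in specific positions" concrete, here is a small sketch that encodes text as punch positions. It assumes a simplified version of the later IBM 80-column card code (twelve rows labelled 12, 11, 0 to 9; digits use one punch, letters use a zone punch plus a digit punch). Hollerith's original 1890 cards used a different layout, and the function names here are made up for illustration.

```python
# Row labels from the top of the card to the bottom.
ROWS = ["12", "11", "0", "1", "2", "3", "4", "5", "6", "7", "8", "9"]
DIGITS = "0123456789"


def punches_for(char: str) -> set[str]:
    """Return the set of rows punched in one column for a single character."""
    if len(char) != 1:
        raise ValueError("expected exactly one character")
    if char == " ":
        return set()                      # a blank column has no holes
    if char in DIGITS:
        return {char}                     # digits: a single punch in that row
    if "A" <= char <= "I":
        return {"12", str(ord(char) - ord("A") + 1)}  # zone 12 + rows 1..9
    if "J" <= char <= "R":
        return {"11", str(ord(char) - ord("J") + 1)}  # zone 11 + rows 1..9
    if "S" <= char <= "Z":
        return {"0", str(ord(char) - ord("S") + 2)}   # zone 0 + rows 2..9
    raise ValueError(f"character {char!r} is not covered by this sketch")


def render_card(text: str) -> str:
    """Draw the card as text: 'O' marks a hole, '.' marks unpunched card."""
    columns = [punches_for(c) for c in text.upper()]
    lines = []
    for row in ROWS:
        cells = "".join("O" if row in col else "." for col in columns)
        lines.append(f"{row:>2} {cells}")
    return "\n".join(lines)


print(render_card("CENSUS 1890"))
```

Each column holds one character, so a reader only needs to detect which rows in a column have holes to recover the data.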

Punch cards were indeed successful, becoming a staple in data processing for the better part of a century. They were used extensively in a variety of applications, from census data collection to controlling machinery and managing business operations. Their reliability and ease of use made them an indispensable tool in the early days of computing and data management.

However, punch cards also had their limitations. The physical nature of the cards meant they were prone to wear and tear, and exposure to environmental factors could damage them, leading to data loss. Additionally, the process of punching the cards was labor-intensive and prone to human error. The storage capacity of punch cards was also limited, requiring large volumes of cards to store substantial amounts of data, which in turn created challenges in storage and organization.
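A quick back-of-the-envelope calculation shows how fast the card count grows. This sketch assumes the later 80-column card format with one character (one byte) per column; that figure is an assumption for illustration and is not stated above.

```python
COLUMNS_PER_CARD = 80  # assumption: 80-column card, one character per column


def cards_needed(num_bytes: int, columns: int = COLUMNS_PER_CARD) -> int:
    """Number of cards required to hold num_bytes, rounding up."""
    return (num_bytes + columns - 1) // columns


# One megabyte (1,000,000 bytes) of data:
print(cards_needed(1_000_000))  # 12500
```

Under these assumptions, a single megabyte already means thousands of cards that have to be kept in order, stored, and protected.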

Despite these challenges, the legacy of punch cards is significant. They laid the groundwork for subsequent developments in data storage and processing technologies.

Electro-Mechanical Storage

Electro-mechanical storage systems marked a significant evolution in the realm of data storage, introducing a more dynamic and versatile approach to storing and accessing information. These systems, which emerged in the mid-20th century, leveraged both electrical and mechanical components to enhance data storage and retrieval processes beyond what was possible with purely mechanical media like punch cards.

One of the earliest and most notable examples of electro-mechanical storage is magnetic drum memory, invented in 1932 and widely used in the 1950s. These drums were cylinders coated with a magnetic recording material where data could be stored in the form of magnetized spots. The introduction of electro-mechanical storage solved several limitations associated with punch cards, offering significantly faster data access times and the ability to easily modify stored information without the need for physical handling or replacement of cards.

Despite their revolutionary impact, electro-mechanical storage systems were not without their challenges. Durability was a concern, as the mechanical parts of these systems were prone to wear and tear over time, potentially leading to data loss or the need for frequent maintenance. Additionally, the size and cost of these early storage systems limited their accessibility and practicality for smaller businesses or personal use.

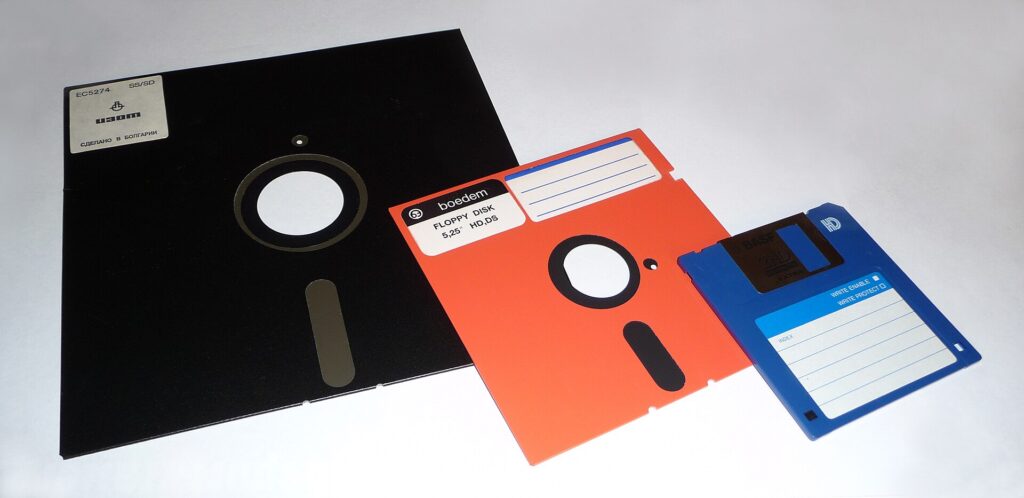

Floppy Disks

Floppy disks were a groundbreaking technology in the history of personal computing, serving as a primary medium for data storage and transfer from the 1970s through the early 2000s. Their development was pivotal in making personal computing more versatile and accessible, enabling users to easily share and transport data.

Floppy disks came in several sizes and formats, with the most common being the 8-inch, 5.25-inch, and 3.5-inch disks. The 8-inch disks were the first to be introduced, but it was the 5.25-inch and later the 3.5-inch disks that became staples in the personal computing world. The 3.5-inch disks, in particular, were notable for their rigid plastic casing and metal shutter, offering improved durability and protection for the magnetic disk inside.

Not only did they become staples of the personal computing world, they remain the “Save” icon even today, more than 20 years after they became obsolete.

Compatibility was an important consideration with floppy disks, especially as different sizes and formats were introduced. The 5.25-inch disks, popular in the 1980s, gradually gave way to the 3.5-inch disks in the 1990s, necessitating that computers be equipped with drives capable of reading the newer format. Operating systems like DOS and Microsoft Windows provided the necessary software support to work with these various disk formats, ensuring that users could access their data across different machines.

Despite their widespread use, floppy disks were not without issues. Their limited storage capacity, which ranged from 360 KB on common double-density 5.25-inch disks to 1.44 MB on the standard 3.5-inch disks, became increasingly insufficient as file sizes grew. Moreover, floppy disks were susceptible to physical damage, magnetic interference, and data corruption, leading to potential data loss. The need for greater reliability and larger storage capacities eventually led to the decline of floppy disks, as they were supplanted by optical media like CDs and DVDs, and later by USB flash drives and cloud storage solutions.
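The 360 KB and 1.44 MB figures fall straight out of the disk geometry. The sketch below uses the standard PC layouts for these two formats (sides, tracks per side, sectors per track, 512-byte sectors); the function name is just for illustration.

```python
def floppy_capacity_bytes(sides: int, tracks_per_side: int,
                          sectors_per_track: int,
                          bytes_per_sector: int = 512) -> int:
    """Formatted capacity = sides x tracks x sectors x bytes per sector."""
    return sides * tracks_per_side * sectors_per_track * bytes_per_sector


formats = {
    "5.25-inch double-density": (2, 40, 9),
    "3.5-inch high-density": (2, 80, 18),
}

for name, geometry in formats.items():
    size = floppy_capacity_bytes(*geometry)
    print(f"{name}: {size:,} bytes = {size // 1024} KiB")
```

The 3.5-inch disk works out to 1,474,560 bytes, or 1,440 KiB. The familiar "1.44 MB" label comes from dividing 1,440 by 1,000, mixing binary kilobytes with a decimal prefix.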

Floppy disks, while now largely obsolete, hold an important place in computing history. They symbolized the era of personal computing expansion, making digital storage and data transfer accessible to the masses. Their legacy is a testament to the rapid pace of technological advancement and the continuous quest for better, more reliable data storage solutions.

Optical Media

Optical media, encompassing CD (Compact Disc), DVD (Digital Versatile Disc), HD-DVD (High Density Digital Versatile Disc), Blu-Ray, and Mini CD formats, revolutionized data backup, storage, and distribution in the late 20th and early 21st centuries. These storage mediums became popular due to their significant capacity increase over floppy disks, durability, and portability, making them ideal for a wide range of applications including software distribution, music and video playback, and data archiving.

The popularity of optical media stemmed from its ability to store large amounts of data in a compact, easy-to-use format. CDs, for instance, could hold up to 700 MB of data, while DVDs expanded this capacity to up to 4.7 GB per layer. HD-DVD offered 15 GB per layer, with a 30 GB dual-layer variant. Blu-Ray discs extended the storage capacity further, offering up to 25 GB per layer, with some variants using three or four layers (100-128 GB). This made optical media an excellent choice for distributing multimedia content and software, and for conducting data backups that required more space than floppy disks could provide.

Optical media was also touted for its perceived durability and longevity. Advertisements often highlighted these discs as near indestructible, resistant to data loss from magnetic interference—a common problem with magnetic storage media like floppy disks and tapes. One famous advertisement featuring Bill Gates illustrated the vast storage capacity of CDs by suggesting that a single CD could store as much information as could be printed on a mountain of paper.

However, the early days of writable DVDs saw compatibility issues with the “+” and “-” format distinctions (e.g., DVD+R vs. DVD-R). The discs looked identical; the differences lay in the recording standards and in compatibility with disc readers and writers. This confusion sometimes led to problems where a disc might not be readable by all drives, especially older models.

Despite their advantages, optical media was not without flaws. The discs could be scratched or damaged, affecting their readability. Moreover, the claim of being indestructible was quickly challenged by real-world use; while they were more durable than floppy disks, CDs and DVDs could still suffer from data degradation over time, known as “disc rot,” especially if not stored properly.

Hard Disk Drives

Hard Disk Drives (HDDs) have been a cornerstone of data storage technology since their inception in the 1950s. The evolution of HDDs over the decades is a testament to the relentless pursuit of higher storage capacities, faster access times, and more compact and efficient designs. From the early models storing just a few megabytes to today’s drives that can hold 20 TB or more, HDDs have continuously evolved to meet the growing demands of data storage.

Initially, HDDs were massive devices, occupying entire rooms, but they have since shrunk to the compact 3.5-inch and 2.5-inch form factors commonly used in desktops and laptops. There was even a 1.8-inch form factor, used in early “netbooks” and small 7.9- to 11-inch laptops. This reduction in size did not come at the expense of capacity; rather, technological advancements have allowed for an exponential increase in data storage capacity.

The interface standards for connecting HDDs to computers have also evolved, from the Parallel ATA (PATA or IDE) to the more advanced Serial ATA (SATA) and then to network-based interfaces like iSCSI, which facilitates data transfer over LANs, WANs, and the Internet. This progression has not only improved data transfer rates but also expanded the versatility of HDDs in various computing environments.

One significant milestone in the evolution of HDD technology was the enablement of Network Attached Storage (NAS) devices. NAS devices are dedicated file storage systems that provide data access to a network of users. The development of more compact, high-capacity, and network-compatible HDDs made it possible to create NAS solutions that are accessible, efficient, and scalable, catering to both home users and businesses.

Disadvantages and Challenges

Despite their advancements, HDDs have faced criticism for their power consumption, especially in large data centers where they can be a significant part of the energy footprint. An active HDD can consume between 6 and 15 watts, and up to 50 watts when it initially spins up. This power demand increases with the use of multiple drives, highlighting the importance of energy efficiency in modern storage solutions.
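To see how these per-drive figures add up, here is a rough estimate for a fleet of drives running around the clock. The fleet size of 1,000 drives is a hypothetical example, and the calculation ignores cooling, idle states, and power-supply losses.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_kwh(drive_count: int, watts_per_drive: float) -> float:
    """Energy used in a year by drives running continuously, in kWh."""
    return drive_count * watts_per_drive * HOURS_PER_YEAR / 1000


def peak_spinup_watts(drive_count: int, spinup_watts: float = 50) -> float:
    """Worst-case draw if every drive spins up at the same moment."""
    return drive_count * spinup_watts


drives = 1000  # hypothetical fleet size
print(annual_energy_kwh(drives, 6))    # low end of the active range
print(annual_energy_kwh(drives, 15))   # high end of the active range
print(peak_spinup_watts(drives))       # simultaneous spin-up, in watts
```

The spin-up peak is why large arrays commonly stagger drive start-up instead of powering every disk at once.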

Furthermore, HDDs are mechanical devices with moving parts, making them more susceptible to physical damage and wear over time compared to solid-state drives (SSDs). The spinning disks and moving read/write heads can also result in longer access times and lower speeds compared to the flash memory technology used in SSDs and flash drives.

Flash Drives and Memory Cards

The introduction of flash drives and memory cards marked a significant turning point in the realm of personal and portable data storage. Unlike their predecessors, these devices utilized solid-state memory, offering a compact, durable, and efficient means of storing and transferring data. The universal serial bus (USB) standard played a crucial role in the widespread adoption of flash drives, providing a standardized interface for connecting these devices to computers and other electronic equipment, thus ensuring their compatibility across a wide range of devices.

The USB standard, introduced in the mid-1990s, revolutionized the way devices connect and communicate with computers. Flash drives, benefiting from USB’s plug-and-play capability, quickly became a popular medium for data storage and transfer, appreciated for their convenience, speed, and reliability. Similarly, memory cards, such as SD (Secure Digital) cards, found their place in digital cameras, smartphones, and gaming consoles, significantly enhancing the portability of data and media.

The impact of flash storage on gaming consoles was profound, allowing players to save game states, profiles, and downloadable content with ease. This capability transformed the gaming experience, enabling personalized gameplay and the ability to pick up exactly where one left off, a feature that was less practical with cartridge-based or internal storage systems.

The first mass backup options provided by flash drives revolutionized data portability and sharing, making it easier than ever for users to back up important documents, photos, and other personal data. This convenience paved the way for the widespread use of flash-based storage in a variety of devices, including smartphones, tablets, and ultimately, solid-state drives (SSDs).

Despite their advantages, the early days of flash storage were not without challenges. Initial capacities were relatively limited, and the cost per megabyte was significantly higher than that of magnetic storage solutions like hard disk drives (HDDs). Moreover, early flash drives and memory cards had slower write speeds and a limited number of write cycles, which could impact their longevity and reliability over time.

Solid-State Drives

The advent of Solid-State Drives (SSDs) marked a revolutionary leap in storage technology. Unlike their mechanical HDD counterparts, SSDs store data on flash memory chips, leading to faster data access times, lower power consumption, and greater durability due to the absence of moving parts. This transition represented not just an evolution in storage mediums but a transformative shift in how computers perform and operate.

The initial adoption of SSDs was hindered by their high cost per gigabyte compared to HDDs. However, as manufacturing processes improved and the technology matured, prices have steadily decreased, making SSDs an increasingly viable option for both consumer and enterprise storage solutions.

Today, SSDs are the preferred choice (at least for now) for new laptops, desktops, and servers, offering a compelling blend of performance, reliability, and efficiency.

Despite their advantages, SSDs face challenges such as finite write cycles and data recovery complexities. Manufacturers continue to innovate to overcome these limitations, extending the endurance of SSDs and developing more sophisticated data management and error-correction algorithms.
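The effect of finite write cycles can be estimated with simple arithmetic. The sketch below uses a common rule of thumb: total writable data is roughly capacity times program/erase cycles, divided by write amplification. Every number in the example is a hypothetical placeholder rather than a specification for any real drive.

```python
def estimated_lifetime_years(capacity_gb: float, pe_cycles: int,
                             daily_writes_gb: float,
                             write_amplification: float = 1.0) -> float:
    """Rough endurance estimate: how long until the write budget runs out."""
    # Total data the host can write before the cells are worn out.
    total_host_writes_gb = capacity_gb * pe_cycles / write_amplification
    return total_host_writes_gb / daily_writes_gb / 365


# Hypothetical drive: 1,000 GB, 1,000 P/E cycles, 50 GB written per day,
# with each host write costing two physical writes.
years = estimated_lifetime_years(1000, 1000, 50, write_amplification=2.0)
print(f"{years:.1f} years")
```

Wear leveling and error correction exist to keep real drives close to this kind of ideal budget, which is why the estimate is only a starting point.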

NVMe

NVMe, or Non-Volatile Memory Express, represents a significant advancement in storage technology, primarily designed to maximize the strengths of solid-state drives (SSDs) over traditional SATA connections. As an interface, NVMe leverages the high-speed PCIe (Peripheral Component Interconnect Express) bus instead of the older SATA interface, which was originally optimized for slower hard disk drives (HDDs).

NVMe was developed as a response to the growing need for faster data access and processing speeds, a demand that SATA could no longer efficiently meet due to its limitations in bandwidth and latency. First specified in 2011, with commercial drives following around 2013, NVMe was designed from the ground up to harness the speed of modern SSDs, significantly reducing data access times and improving overall system performance.
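The bandwidth gap can be worked out from the link parameters. This sketch compares SATA III (6 Gbit/s line rate, 8b/10b encoding) with a PCIe 3.0 x4 link (8 GT/s per lane, 128b/130b encoding). The x4 PCIe 3.0 configuration is an assumption chosen as a typical example; these are raw link ceilings, before any protocol overhead.

```python
def usable_mb_per_s(line_rate_gbps: float, payload_bits: int,
                    total_bits: int, lanes: int = 1) -> float:
    """Link throughput in MB/s after line-encoding overhead."""
    bits_per_second = line_rate_gbps * 1e9 * payload_bits / total_bits * lanes
    return bits_per_second / 8 / 1e6


sata3 = usable_mb_per_s(6, 8, 10)                  # SATA III, single link
pcie3_x4 = usable_mb_per_s(8, 128, 130, lanes=4)   # assumed PCIe 3.0 x4 link

print(f"SATA III:    {sata3:.0f} MB/s")
print(f"PCIe 3.0 x4: {pcie3_x4:.0f} MB/s")
print(f"Ratio:       {pcie3_x4 / sata3:.1f}x")
```

Under these assumptions the PCIe link offers several times the ceiling of SATA, and newer PCIe generations widen the gap further.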

NVMe has rapidly become the standard for new storage devices, especially in environments where speed and low latency are critical, such as data centers, high-performance computing, and gaming. Its adoption is expected to continue growing, driven by the increasing demand for faster data processing and the continuous development of NVMe technology to address current limitations, such as improving power efficiency and heat management.

Cloud Storage

Cloud storage has emerged as a pivotal innovation in the realm of data management, fundamentally transforming how individuals and organizations store, access, and manage their digital assets. From its early beginnings to the sprawling ecosystems we see today, cloud storage represents a leap towards flexibility, scalability, and resilience in data handling.

The concept of cloud storage took a significant leap forward in 2006 when Amazon launched its Simple Storage Service (S3), a service that allowed users to store and retrieve any amount of data, at any time, from anywhere on the web. This was a groundbreaking moment, signaling the shift from traditional on-premises storage solutions to a more distributed, internet-based model. Amazon S3’s launch not only democratized data storage for businesses of all sizes but also set the stage for intense competition and innovation in the sector.

Following Amazon’s lead, tech giants like Google and Microsoft soon entered the fray with their cloud storage solutions—Google Cloud Storage and Microsoft Azure Blob Storage, respectively. These platforms offered similar functionalities, aiming to capture a slice of the rapidly growing market. Each service sought to differentiate itself through unique features, pricing models, and integration options with other cloud services.

Parallel to these commercial offerings, the open-source community responded with projects like OpenStack Swift, Ceph, and MinIO, providing organizations with the flexibility to deploy cloud storage solutions in their private clouds or hybrid environments. These open-source alternatives have been crucial for businesses wary of vendor lock-in or those with specific compliance or data sovereignty requirements.

Cloud storage offers several compelling advantages, such as scalability, accessibility, and cost-effectiveness, and, most importantly, resilience and redundancy.

For the first time in history, we were able to store our data and knowledge easily and effortlessly, in real time, across many servers, data centers, and continents.

Despite its benefits, cloud storage is not without its challenges. Dependence on internet connectivity and vendor lock-in are still concerns almost 20 years after the first S3 bucket went online.